UPDATE 04/01/2016

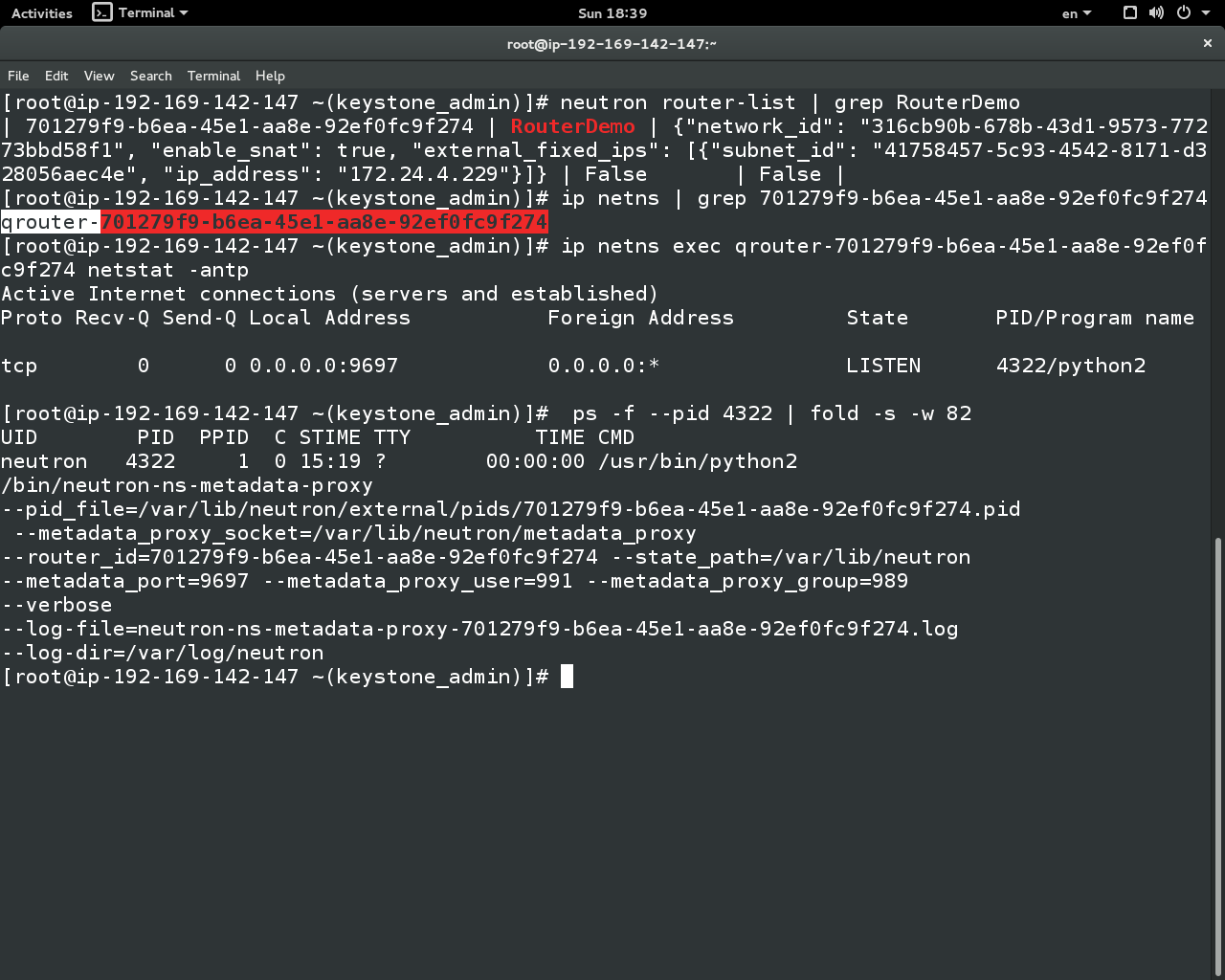

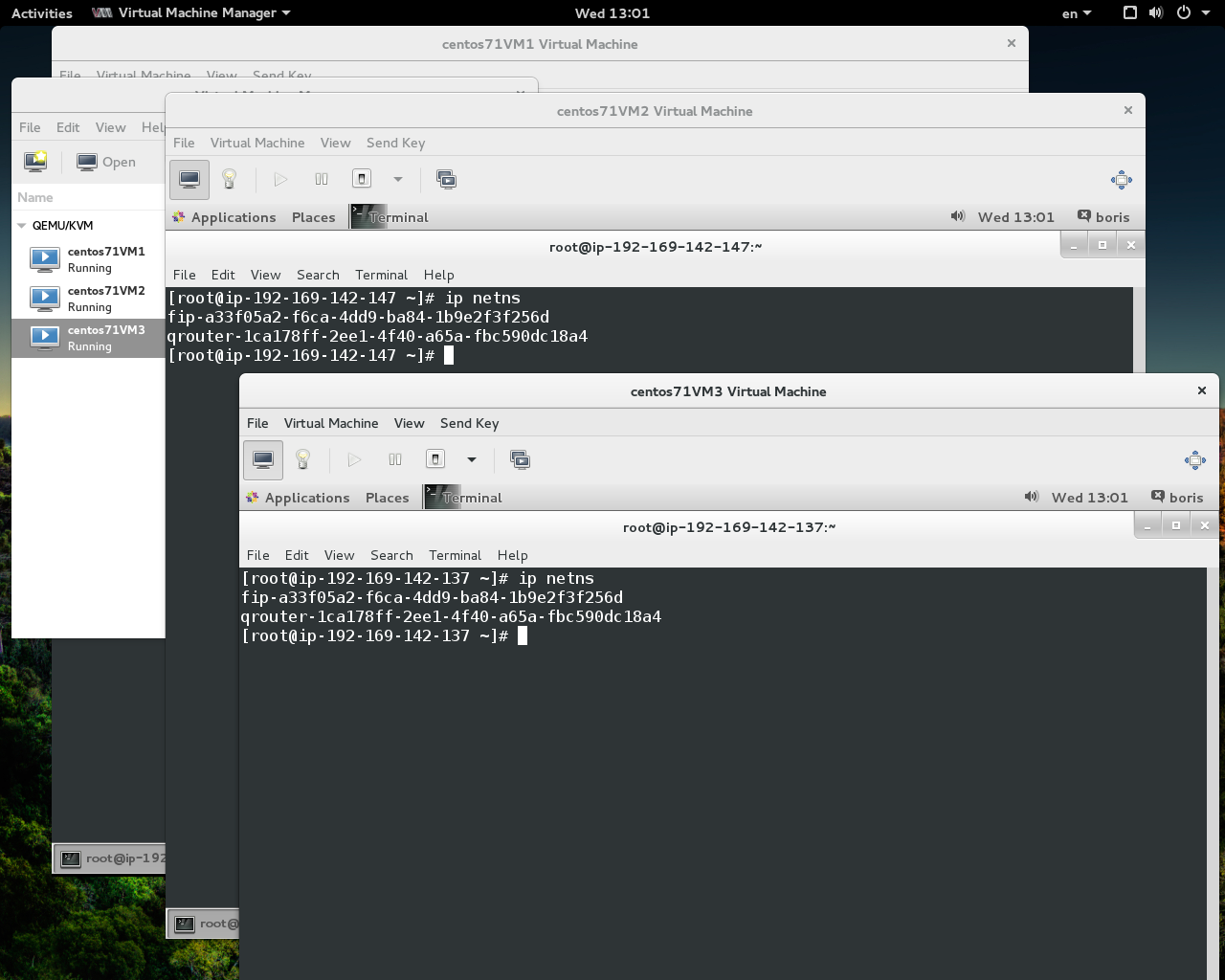

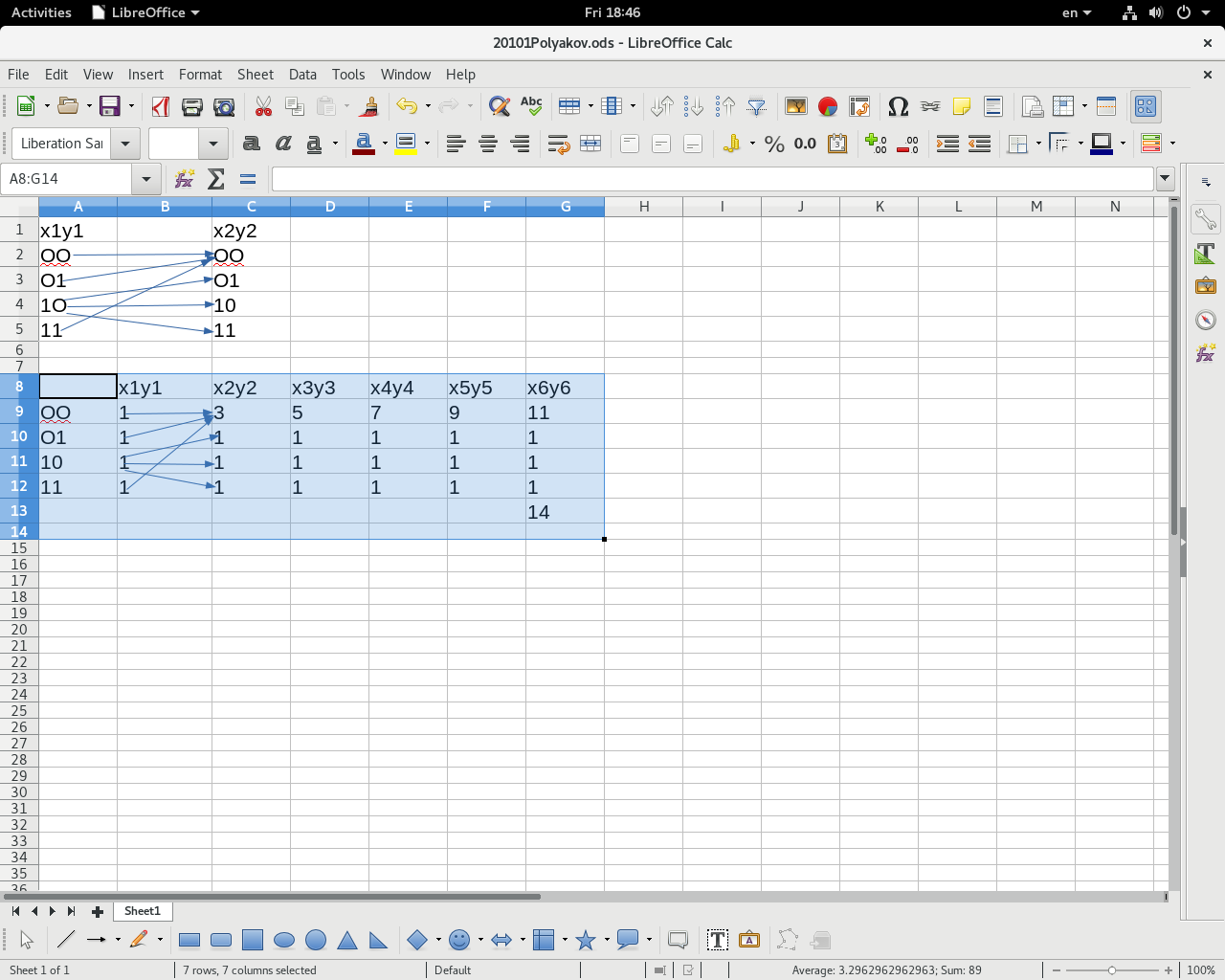

DVR with the Nova-Docker driver (stable/mitaka) was tested on RDO Mitaka (build 20160329) and showed none of the issues described in the link for RDO Liberty. So, create a DVR deployment with Controller/Network + N(*) Compute Nodes. Switch to the Docker hypervisor on each Compute Node and make the required updates to Glance and the filters file on the Controller. You are all set. The FIP(s) of Nova-Docker instances are reachable from outside via the Neutron Distributed Router (DNAT) using the “fg” interface (fip-namespace) residing on the same host as the Docker hypervisor. South-North traffic does not pass through VXLAN tunnels on DVR systems.

END UPDATE

Perform a two-node cluster deployment: Controller + Network&Compute (ML2&OVS&VXLAN). Another configuration available via packstack is Controller+Storage+Compute&Network.

The deployment schema below starts all four Neutron agents on the Compute node (the one supposed to run Nova-Docker instances). Thus routing via the VXLAN tunnel is excluded. Nova-Docker instances are routed to the Internet and vice versa via the local Neutron router (DNAT/SNAT) residing on the same host where the Docker hypervisor is running.

A multi-node solution requires testing DVR with the Nova-Docker driver.

For now this has been tested only on an RDO Liberty DVR system :-

An RDO Liberty DVR cluster was switched to Nova-Docker (stable/liberty) successfully. Containers (instances) may be launched on Compute Nodes and are available via their FIP(s) due to Neutron (DNAT) routing via the “fg” interface of the corresponding fip-namespace. Snapshots here

The question will be closed if I am able to get the same results on RDO Mitaka, which would solve the problem of a multi-node Docker hypervisor deployment across Compute nodes that does not use VXLAN tunnels for South-North traffic and is supported by the Metadata, L3 and openvswitch Neutron agents, with a single DHCP agent providing private IPs and residing on the Controller/Network Node.

SELinux should be set to permissive mode after the RDO deployment.
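One way to make the permissive setting survive a reboot is to rewrite the SELINUX= line in the SELinux config file. The helper below is only a sketch: the function name `set_selinux_permissive` is made up for illustration, and it assumes GNU sed and the usual /etc/selinux/config location.

```shell
#!/bin/bash
# Hypothetical helper (not from the original post): persist permissive mode.
# Takes the config file as an optional argument so it can be tried on a copy.
set_selinux_permissive() {
    local cfg="${1:-/etc/selinux/config}"
    # Only the SELINUX= line is rewritten; SELINUXTYPE= is left untouched.
    sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$cfg"
}

# Typical use on a real host (as root):
#   setenforce 0              # switch the running system right away
#   set_selinux_permissive    # keep the setting across reboots
```

Running `getenforce` afterwards should report Permissive.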

First install repositories for RDO Mitaka (the most recent build passed CI):-

# yum -y install yum-plugin-priorities

# cd /etc/yum.repos.d

# curl -O https://trunk.rdoproject.org/centos7-mitaka/delorean-deps.repo

# curl -O https://trunk.rdoproject.org/centos7-mitaka/current-passed-ci/delorean.repo

# yum -y install openstack-packstack (Controller only)

********************************************

Answer file for RDO Mitaka deployment

********************************************

[general]

CONFIG_SSH_KEY=/root/.ssh/id_rsa.pub

CONFIG_DEFAULT_PASSWORD=

CONFIG_SERVICE_WORKERS=%{::processorcount}

CONFIG_MARIADB_INSTALL=y

CONFIG_GLANCE_INSTALL=y

CONFIG_CINDER_INSTALL=y

CONFIG_MANILA_INSTALL=n

CONFIG_NOVA_INSTALL=y

CONFIG_NEUTRON_INSTALL=y

CONFIG_HORIZON_INSTALL=y

CONFIG_SWIFT_INSTALL=y

CONFIG_CEILOMETER_INSTALL=y

CONFIG_AODH_INSTALL=y

CONFIG_GNOCCHI_INSTALL=y

CONFIG_SAHARA_INSTALL=n

CONFIG_HEAT_INSTALL=n

CONFIG_TROVE_INSTALL=n

CONFIG_IRONIC_INSTALL=n

CONFIG_CLIENT_INSTALL=y

CONFIG_NTP_SERVERS=

CONFIG_NAGIOS_INSTALL=y

EXCLUDE_SERVERS=

CONFIG_DEBUG_MODE=n

CONFIG_CONTROLLER_HOST=192.169.142.127

CONFIG_COMPUTE_HOSTS=192.169.142.137

CONFIG_NETWORK_HOSTS=192.169.142.137

CONFIG_VMWARE_BACKEND=n

CONFIG_UNSUPPORTED=n

CONFIG_USE_SUBNETS=n

CONFIG_VCENTER_HOST=

CONFIG_VCENTER_USER=

CONFIG_VCENTER_PASSWORD=

CONFIG_VCENTER_CLUSTER_NAMES=

CONFIG_STORAGE_HOST=192.169.142.127

CONFIG_SAHARA_HOST=192.169.142.127

CONFIG_USE_EPEL=y

CONFIG_REPO=

CONFIG_ENABLE_RDO_TESTING=n

CONFIG_RH_USER=

CONFIG_SATELLITE_URL=

CONFIG_RH_SAT6_SERVER=

CONFIG_RH_PW=

CONFIG_RH_OPTIONAL=y

CONFIG_RH_PROXY=

CONFIG_RH_SAT6_ORG=

CONFIG_RH_SAT6_KEY=

CONFIG_RH_PROXY_PORT=

CONFIG_RH_PROXY_USER=

CONFIG_RH_PROXY_PW=

CONFIG_SATELLITE_USER=

CONFIG_SATELLITE_PW=

CONFIG_SATELLITE_AKEY=

CONFIG_SATELLITE_CACERT=

CONFIG_SATELLITE_PROFILE=

CONFIG_SATELLITE_FLAGS=

CONFIG_SATELLITE_PROXY=

CONFIG_SATELLITE_PROXY_USER=

CONFIG_SATELLITE_PROXY_PW=

CONFIG_SSL_CACERT_FILE=/etc/pki/tls/certs/selfcert.crt

CONFIG_SSL_CACERT_KEY_FILE=/etc/pki/tls/private/selfkey.key

CONFIG_SSL_CERT_DIR=~/packstackca/

CONFIG_SSL_CACERT_SELFSIGN=y

CONFIG_SELFSIGN_CACERT_SUBJECT_C=--

CONFIG_SELFSIGN_CACERT_SUBJECT_ST=State

CONFIG_SELFSIGN_CACERT_SUBJECT_L=City

CONFIG_SELFSIGN_CACERT_SUBJECT_O=openstack

CONFIG_SELFSIGN_CACERT_SUBJECT_OU=packstack

CONFIG_SELFSIGN_CACERT_SUBJECT_CN=ip-192-169-142-127.ip.secureserver.net

CONFIG_SELFSIGN_CACERT_SUBJECT_MAIL=admin@ip-192-169-142-127.ip.secureserver.net

CONFIG_AMQP_BACKEND=rabbitmq

CONFIG_AMQP_HOST=192.169.142.127

CONFIG_AMQP_ENABLE_SSL=n

CONFIG_AMQP_ENABLE_AUTH=n

CONFIG_AMQP_NSS_CERTDB_PW=PW_PLACEHOLDER

CONFIG_AMQP_AUTH_USER=amqp_user

CONFIG_AMQP_AUTH_PASSWORD=PW_PLACEHOLDER

CONFIG_MARIADB_HOST=192.169.142.127

CONFIG_MARIADB_USER=root

CONFIG_MARIADB_PW=7207ae344ed04957

CONFIG_KEYSTONE_DB_PW=abcae16b785245c3

CONFIG_KEYSTONE_DB_PURGE_ENABLE=True

CONFIG_KEYSTONE_REGION=RegionOne

CONFIG_KEYSTONE_ADMIN_TOKEN=3ad2de159f9649afb0c342ba57e637d9

CONFIG_KEYSTONE_ADMIN_EMAIL=root@localhost

CONFIG_KEYSTONE_ADMIN_USERNAME=admin

CONFIG_KEYSTONE_ADMIN_PW=7049f834927e4468

CONFIG_KEYSTONE_DEMO_PW=bf737b785cfa4398

CONFIG_KEYSTONE_API_VERSION=v2.0

CONFIG_KEYSTONE_TOKEN_FORMAT=UUID

CONFIG_KEYSTONE_SERVICE_NAME=httpd

CONFIG_KEYSTONE_IDENTITY_BACKEND=sql

CONFIG_KEYSTONE_LDAP_URL=ldap://12.0.0.127

CONFIG_KEYSTONE_LDAP_USER_DN=

CONFIG_KEYSTONE_LDAP_USER_PASSWORD=

CONFIG_KEYSTONE_LDAP_SUFFIX=

CONFIG_KEYSTONE_LDAP_QUERY_SCOPE=one

CONFIG_KEYSTONE_LDAP_PAGE_SIZE=-1

CONFIG_KEYSTONE_LDAP_USER_SUBTREE=

CONFIG_KEYSTONE_LDAP_USER_FILTER=

CONFIG_KEYSTONE_LDAP_USER_OBJECTCLASS=

CONFIG_KEYSTONE_LDAP_USER_ID_ATTRIBUTE=

CONFIG_KEYSTONE_LDAP_USER_NAME_ATTRIBUTE=

CONFIG_KEYSTONE_LDAP_USER_MAIL_ATTRIBUTE=

CONFIG_KEYSTONE_LDAP_USER_ENABLED_ATTRIBUTE=

CONFIG_KEYSTONE_LDAP_USER_ENABLED_MASK=-1

CONFIG_KEYSTONE_LDAP_USER_ENABLED_DEFAULT=TRUE

CONFIG_KEYSTONE_LDAP_USER_ENABLED_INVERT=n

CONFIG_KEYSTONE_LDAP_USER_ATTRIBUTE_IGNORE=

CONFIG_KEYSTONE_LDAP_USER_DEFAULT_PROJECT_ID_ATTRIBUTE=

CONFIG_KEYSTONE_LDAP_USER_ALLOW_CREATE=n

CONFIG_KEYSTONE_LDAP_USER_ALLOW_UPDATE=n

CONFIG_KEYSTONE_LDAP_USER_ALLOW_DELETE=n

CONFIG_KEYSTONE_LDAP_USER_PASS_ATTRIBUTE=

CONFIG_KEYSTONE_LDAP_USER_ENABLED_EMULATION_DN=

CONFIG_KEYSTONE_LDAP_USER_ADDITIONAL_ATTRIBUTE_MAPPING=

CONFIG_KEYSTONE_LDAP_GROUP_SUBTREE=

CONFIG_KEYSTONE_LDAP_GROUP_FILTER=

CONFIG_KEYSTONE_LDAP_GROUP_OBJECTCLASS=

CONFIG_KEYSTONE_LDAP_GROUP_ID_ATTRIBUTE=

CONFIG_KEYSTONE_LDAP_GROUP_NAME_ATTRIBUTE=

CONFIG_KEYSTONE_LDAP_GROUP_MEMBER_ATTRIBUTE=

CONFIG_KEYSTONE_LDAP_GROUP_DESC_ATTRIBUTE=

CONFIG_KEYSTONE_LDAP_GROUP_ATTRIBUTE_IGNORE=

CONFIG_KEYSTONE_LDAP_GROUP_ALLOW_CREATE=n

CONFIG_KEYSTONE_LDAP_GROUP_ALLOW_UPDATE=n

CONFIG_KEYSTONE_LDAP_GROUP_ALLOW_DELETE=n

CONFIG_KEYSTONE_LDAP_GROUP_ADDITIONAL_ATTRIBUTE_MAPPING=

CONFIG_KEYSTONE_LDAP_USE_TLS=n

CONFIG_KEYSTONE_LDAP_TLS_CACERTDIR=

CONFIG_KEYSTONE_LDAP_TLS_CACERTFILE=

CONFIG_KEYSTONE_LDAP_TLS_REQ_CERT=demand

CONFIG_GLANCE_DB_PW=41264fc52ffd4fe8

CONFIG_GLANCE_KS_PW=f6a9398960534797

CONFIG_GLANCE_BACKEND=file

CONFIG_CINDER_DB_PW=5ac08c6d09ba4b69

CONFIG_CINDER_DB_PURGE_ENABLE=True

CONFIG_CINDER_KS_PW=c8cb1ecb8c2b4f6f

CONFIG_CINDER_BACKEND=lvm

CONFIG_CINDER_VOLUMES_CREATE=y

CONFIG_CINDER_VOLUMES_SIZE=2G

CONFIG_CINDER_GLUSTER_MOUNTS=

CONFIG_CINDER_NFS_MOUNTS=

CONFIG_CINDER_NETAPP_LOGIN=

CONFIG_CINDER_NETAPP_PASSWORD=

CONFIG_CINDER_NETAPP_HOSTNAME=

CONFIG_CINDER_NETAPP_SERVER_PORT=80

CONFIG_CINDER_NETAPP_STORAGE_FAMILY=ontap_cluster

CONFIG_CINDER_NETAPP_TRANSPORT_TYPE=http

CONFIG_CINDER_NETAPP_STORAGE_PROTOCOL=nfs

CONFIG_CINDER_NETAPP_SIZE_MULTIPLIER=1.0

CONFIG_CINDER_NETAPP_EXPIRY_THRES_MINUTES=720

CONFIG_CINDER_NETAPP_THRES_AVL_SIZE_PERC_START=20

CONFIG_CINDER_NETAPP_THRES_AVL_SIZE_PERC_STOP=60

CONFIG_CINDER_NETAPP_NFS_SHARES=

CONFIG_CINDER_NETAPP_NFS_SHARES_CONFIG=/etc/cinder/shares.conf

CONFIG_CINDER_NETAPP_VOLUME_LIST=

CONFIG_CINDER_NETAPP_VFILER=

CONFIG_CINDER_NETAPP_PARTNER_BACKEND_NAME=

CONFIG_CINDER_NETAPP_VSERVER=

CONFIG_CINDER_NETAPP_CONTROLLER_IPS=

CONFIG_CINDER_NETAPP_SA_PASSWORD=

CONFIG_CINDER_NETAPP_ESERIES_HOST_TYPE=linux_dm_mp

CONFIG_CINDER_NETAPP_WEBSERVICE_PATH=/devmgr/v2

CONFIG_CINDER_NETAPP_STORAGE_POOLS=

CONFIG_IRONIC_DB_PW=PW_PLACEHOLDER

CONFIG_IRONIC_KS_PW=PW_PLACEHOLDER

CONFIG_NOVA_DB_PURGE_ENABLE=True

CONFIG_NOVA_DB_PW=1e1b5aeeeaf342a8

CONFIG_NOVA_KS_PW=d9583177a2444f06

CONFIG_NOVA_SCHED_CPU_ALLOC_RATIO=16.0

CONFIG_NOVA_SCHED_RAM_ALLOC_RATIO=1.5

CONFIG_NOVA_COMPUTE_MIGRATE_PROTOCOL=tcp

CONFIG_NOVA_COMPUTE_MANAGER=nova.compute.manager.ComputeManager

CONFIG_VNC_SSL_CERT=

CONFIG_VNC_SSL_KEY=

CONFIG_NOVA_PCI_ALIAS=

CONFIG_NOVA_PCI_PASSTHROUGH_WHITELIST=

CONFIG_NOVA_COMPUTE_PRIVIF=

CONFIG_NOVA_NETWORK_MANAGER=nova.network.manager.FlatDHCPManager

CONFIG_NOVA_NETWORK_PUBIF=eth0

CONFIG_NOVA_NETWORK_PRIVIF=

CONFIG_NOVA_NETWORK_FIXEDRANGE=192.168.32.0/22

CONFIG_NOVA_NETWORK_FLOATRANGE=10.3.4.0/22

CONFIG_NOVA_NETWORK_AUTOASSIGNFLOATINGIP=n

CONFIG_NOVA_NETWORK_VLAN_START=100

CONFIG_NOVA_NETWORK_NUMBER=1

CONFIG_NOVA_NETWORK_SIZE=255

CONFIG_NEUTRON_KS_PW=808e36e154bd4cee

CONFIG_NEUTRON_DB_PW=0e2b927a21b44737

CONFIG_NEUTRON_L3_EXT_BRIDGE=br-ex

CONFIG_NEUTRON_METADATA_PW=a965cd23ed2f4502

CONFIG_LBAAS_INSTALL=n

CONFIG_NEUTRON_METERING_AGENT_INSTALL=n

CONFIG_NEUTRON_FWAAS=n

CONFIG_NEUTRON_VPNAAS=n

CONFIG_NEUTRON_ML2_TYPE_DRIVERS=vxlan

CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES=vxlan

CONFIG_NEUTRON_ML2_MECHANISM_DRIVERS=openvswitch

CONFIG_NEUTRON_ML2_FLAT_NETWORKS=*

CONFIG_NEUTRON_ML2_VLAN_RANGES=

CONFIG_NEUTRON_ML2_TUNNEL_ID_RANGES=1001:2000

CONFIG_NEUTRON_ML2_VXLAN_GROUP=239.1.1.2

CONFIG_NEUTRON_ML2_VNI_RANGES=1001:2000

CONFIG_NEUTRON_L2_AGENT=openvswitch

CONFIG_NEUTRON_ML2_SUPPORTED_PCI_VENDOR_DEVS=['15b3:1004', '8086:10ca']

CONFIG_NEUTRON_ML2_SRIOV_AGENT_REQUIRED=n

CONFIG_NEUTRON_ML2_SRIOV_INTERFACE_MAPPINGS=

CONFIG_NEUTRON_LB_INTERFACE_MAPPINGS=

CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS=physnet1:br-ex

CONFIG_NEUTRON_OVS_BRIDGE_IFACES=

CONFIG_NEUTRON_OVS_TUNNEL_IF=eth1

CONFIG_NEUTRON_OVS_TUNNEL_SUBNETS=

CONFIG_NEUTRON_OVS_VXLAN_UDP_PORT=4789

CONFIG_MANILA_DB_PW=PW_PLACEHOLDER

CONFIG_MANILA_KS_PW=PW_PLACEHOLDER

CONFIG_MANILA_BACKEND=generic

CONFIG_MANILA_NETAPP_DRV_HANDLES_SHARE_SERVERS=false

CONFIG_MANILA_NETAPP_TRANSPORT_TYPE=https

CONFIG_MANILA_NETAPP_LOGIN=admin

CONFIG_MANILA_NETAPP_PASSWORD=

CONFIG_MANILA_NETAPP_SERVER_HOSTNAME=

CONFIG_MANILA_NETAPP_STORAGE_FAMILY=ontap_cluster

CONFIG_MANILA_NETAPP_SERVER_PORT=443

CONFIG_MANILA_NETAPP_AGGREGATE_NAME_SEARCH_PATTERN=(.*)

CONFIG_MANILA_NETAPP_ROOT_VOLUME_AGGREGATE=

CONFIG_MANILA_NETAPP_ROOT_VOLUME_NAME=root

CONFIG_MANILA_NETAPP_VSERVER=

CONFIG_MANILA_GENERIC_DRV_HANDLES_SHARE_SERVERS=true

CONFIG_MANILA_GENERIC_VOLUME_NAME_TEMPLATE=manila-share-%s

CONFIG_MANILA_GENERIC_SHARE_MOUNT_PATH=/shares

CONFIG_MANILA_SERVICE_IMAGE_LOCATION=https://www.dropbox.com/s/vi5oeh10q1qkckh/ubuntu_1204_nfs_cifs.qcow2

CONFIG_MANILA_SERVICE_INSTANCE_USER=ubuntu

CONFIG_MANILA_SERVICE_INSTANCE_PASSWORD=ubuntu

CONFIG_MANILA_NETWORK_TYPE=neutron

CONFIG_MANILA_NETWORK_STANDALONE_GATEWAY=

CONFIG_MANILA_NETWORK_STANDALONE_NETMASK=

CONFIG_MANILA_NETWORK_STANDALONE_SEG_ID=

CONFIG_MANILA_NETWORK_STANDALONE_IP_RANGE=

CONFIG_MANILA_NETWORK_STANDALONE_IP_VERSION=4

CONFIG_MANILA_GLUSTERFS_SERVERS=

CONFIG_MANILA_GLUSTERFS_NATIVE_PATH_TO_PRIVATE_KEY=

CONFIG_MANILA_GLUSTERFS_VOLUME_PATTERN=

CONFIG_MANILA_GLUSTERFS_TARGET=

CONFIG_MANILA_GLUSTERFS_MOUNT_POINT_BASE=

CONFIG_MANILA_GLUSTERFS_NFS_SERVER_TYPE=gluster

CONFIG_MANILA_GLUSTERFS_PATH_TO_PRIVATE_KEY=

CONFIG_MANILA_GLUSTERFS_GANESHA_SERVER_IP=

CONFIG_HORIZON_SSL=n

CONFIG_HORIZON_SECRET_KEY=33cade531a764c858e4e6c22488f379f

CONFIG_HORIZON_SSL_CERT=

CONFIG_HORIZON_SSL_KEY=

CONFIG_HORIZON_SSL_CACERT=

CONFIG_SWIFT_KS_PW=30911de72a15427e

CONFIG_SWIFT_STORAGES=

CONFIG_SWIFT_STORAGE_ZONES=1

CONFIG_SWIFT_STORAGE_REPLICAS=1

CONFIG_SWIFT_STORAGE_FSTYPE=ext4

CONFIG_SWIFT_HASH=a55607bff10c4210

CONFIG_SWIFT_STORAGE_SIZE=2G

CONFIG_HEAT_DB_PW=PW_PLACEHOLDER

CONFIG_HEAT_AUTH_ENC_KEY=0ef4161f3bb24230

CONFIG_HEAT_KS_PW=PW_PLACEHOLDER

CONFIG_HEAT_CLOUDWATCH_INSTALL=n

CONFIG_HEAT_CFN_INSTALL=n

CONFIG_HEAT_DOMAIN=heat

CONFIG_HEAT_DOMAIN_ADMIN=heat_admin

CONFIG_HEAT_DOMAIN_PASSWORD=PW_PLACEHOLDER

CONFIG_PROVISION_DEMO=n

CONFIG_PROVISION_TEMPEST=n

CONFIG_PROVISION_DEMO_FLOATRANGE=172.24.4.224/28

CONFIG_PROVISION_IMAGE_NAME=cirros

CONFIG_PROVISION_IMAGE_URL=http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

CONFIG_PROVISION_IMAGE_FORMAT=qcow2

CONFIG_PROVISION_IMAGE_SSH_USER=cirros

CONFIG_TEMPEST_HOST=

CONFIG_PROVISION_TEMPEST_USER=

CONFIG_PROVISION_TEMPEST_USER_PW=PW_PLACEHOLDER

CONFIG_PROVISION_TEMPEST_FLOATRANGE=172.24.4.224/28

CONFIG_PROVISION_TEMPEST_REPO_URI=https://github.com/openstack/tempest.git

CONFIG_PROVISION_TEMPEST_REPO_REVISION=master

CONFIG_RUN_TEMPEST=n

CONFIG_RUN_TEMPEST_TESTS=smoke

CONFIG_PROVISION_OVS_BRIDGE=n

CONFIG_CEILOMETER_SECRET=19ae0e7430174349

CONFIG_CEILOMETER_KS_PW=337b08d4b3a44753

CONFIG_CEILOMETER_SERVICE_NAME=httpd

CONFIG_CEILOMETER_COORDINATION_BACKEND=redis

CONFIG_MONGODB_HOST=192.169.142.127

CONFIG_REDIS_MASTER_HOST=192.169.142.127

CONFIG_REDIS_PORT=6379

CONFIG_REDIS_HA=n

CONFIG_REDIS_SLAVE_HOSTS=

CONFIG_REDIS_SENTINEL_HOSTS=

CONFIG_REDIS_SENTINEL_CONTACT_HOST=

CONFIG_REDIS_SENTINEL_PORT=26379

CONFIG_REDIS_SENTINEL_QUORUM=2

CONFIG_REDIS_MASTER_NAME=mymaster

CONFIG_AODH_KS_PW=acdd500a5fed4700

CONFIG_GNOCCHI_DB_PW=cf11b5d6205f40e7

CONFIG_GNOCCHI_KS_PW=36eba4690b224044

CONFIG_TROVE_DB_PW=PW_PLACEHOLDER

CONFIG_TROVE_KS_PW=PW_PLACEHOLDER

CONFIG_TROVE_NOVA_USER=trove

CONFIG_TROVE_NOVA_TENANT=services

CONFIG_TROVE_NOVA_PW=PW_PLACEHOLDER

CONFIG_SAHARA_DB_PW=PW_PLACEHOLDER

CONFIG_SAHARA_KS_PW=PW_PLACEHOLDER

CONFIG_NAGIOS_PW=02f168ee8edd44e4

**********************************************************************

Upon completion connect to external network on Compute Node :-

**********************************************************************

[root@ip-192-169-142-137 network-scripts(keystone_admin)]# cat ifcfg-br-ex

DEVICE="br-ex"
BOOTPROTO="static"
IPADDR="172.124.4.137"
NETMASK="255.255.255.0"
DNS1="83.221.202.254"
BROADCAST="172.124.4.255"
GATEWAY="172.124.4.1"
NM_CONTROLLED="no"
TYPE="OVSIntPort"
OVS_BRIDGE=br-ex
DEVICETYPE="ovs"
DEFROUTE="yes"
IPV4_FAILURE_FATAL="yes"
IPV6INIT=no

[root@ip-192-169-142-137 network-scripts(keystone_admin)]# cat ifcfg-eth2

DEVICE="eth2"
# HWADDR=00:22:15:63:E4:E2
ONBOOT="yes"
TYPE="OVSPort"
DEVICETYPE="ovs"
OVS_BRIDGE=br-ex
NM_CONTROLLED=no
IPV6INIT=no

[root@ip-192-169-142-137 network-scripts(keystone_admin)]# cat start.sh

#!/bin/bash -x

chkconfig network on

systemctl stop NetworkManager

systemctl disable NetworkManager

service network restart

**********************************************

Verification of Compute node status

**********************************************

[root@ip-192-169-142-137 ~(keystone_admin)]# openstack-status

== Nova services ==

openstack-nova-api: inactive (disabled on boot)

openstack-nova-compute: active

openstack-nova-network: inactive (disabled on boot)

openstack-nova-scheduler: inactive (disabled on boot)

== neutron services ==

neutron-server: inactive (disabled on boot)

neutron-dhcp-agent: active

neutron-l3-agent: active

neutron-metadata-agent: active

neutron-openvswitch-agent: active

==ceilometer services==

openstack-ceilometer-api: inactive (disabled on boot)

openstack-ceilometer-central: inactive (disabled on boot)

openstack-ceilometer-compute: active

openstack-ceilometer-collector: inactive (disabled on boot)

== Support services ==

openvswitch: active

dbus: active

Warning novarc not sourced

[root@ip-192-169-142-137 ~(keystone_admin)]# nova-manage version

13.0.0-0.20160329105656.7662fb9.el7.centos

Also install python-openstackclient on Compute

******************************************

Verification of status on Controller

******************************************

[root@ip-192-169-142-127 ~(keystone_admin)]# openstack-status

== Nova services ==

openstack-nova-api: active

openstack-nova-compute: inactive (disabled on boot)

openstack-nova-network: inactive (disabled on boot)

openstack-nova-scheduler: active

openstack-nova-cert: active

openstack-nova-conductor: active

openstack-nova-console: inactive (disabled on boot)

openstack-nova-consoleauth: active

openstack-nova-xvpvncproxy: inactive (disabled on boot)

== Glance services ==

openstack-glance-api: active

openstack-glance-registry: active

== Keystone service ==

openstack-keystone: inactive (disabled on boot)

== Horizon service ==

openstack-dashboard: active

== neutron services ==

neutron-server: active

neutron-dhcp-agent: inactive (disabled on boot)

neutron-l3-agent: inactive (disabled on boot)

neutron-metadata-agent: inactive (disabled on boot)

== Swift services ==

openstack-swift-proxy: active

openstack-swift-account: active

openstack-swift-container: active

openstack-swift-object: active

== Cinder services ==

openstack-cinder-api: active

openstack-cinder-scheduler: active

openstack-cinder-volume: active

openstack-cinder-backup: active

== Ceilometer services ==

openstack-ceilometer-api: inactive (disabled on boot)

openstack-ceilometer-central: active

openstack-ceilometer-compute: inactive (disabled on boot)

openstack-ceilometer-collector: active

openstack-ceilometer-notification: active

== Support services ==

mysqld: inactive (disabled on boot)

dbus: active

target: active

rabbitmq-server: active

memcached: active

== Keystone users ==

+----------------------------------+------------+---------+----------------------+
|                id                |    name    | enabled |        email         |
+----------------------------------+------------+---------+----------------------+
| f7dbea6e5b704c7d8e77e88c1ce1fce8 | admin      | True    | root@localhost       |
| baf4ee3fe0e749f982747ffe68e0e562 | aodh       | True    | aodh@localhost       |
| 770d5c0974fb49998440b1080e5939a0 | boris      | True    |                      |
| f88d8e83df0f43a991cb7ff063a2439f | ceilometer | True    | ceilometer@localhost |
| e7a92f59f081403abd9c0f92c4f8d8d0 | cinder     | True    | cinder@localhost     |
| 58e531b5eba74db2b4559aaa16561900 | glance     | True    | glance@localhost     |
| d215d99466aa481f847df2a909c139f7 | gnocchi    | True    | gnocchi@localhost    |
| 5d3433f7d54d40d8b9eeb576582cc672 | neutron    | True    | neutron@localhost    |
| 3a50997aa6fc4c129dff624ed9745b94 | nova       | True    | nova@localhost       |
| ef1a323f98cb43c789e4f84860afea35 | swift      | True    | swift@localhost      |
+----------------------------------+------------+---------+----------------------+

== Glance images ==

+--------------------------------------+--------------------------+
| ID                                   | Name                     |
+--------------------------------------+--------------------------+
| cbf88266-0b49-4bc2-9527-cc9c9da0c1eb | derby/docker-glassfish41 |
| 5d0a97c3-c717-46ac-a30f-86208ea0d31d | larsks/thttpd            |
| 80eb0d7d-17ae-49c7-997f-38d8a3aeeabd | rastasheep/ubuntu-sshd   |
+--------------------------------------+--------------------------+

== Nova managed services ==

+----+------------------+----------------------------------------+----------+---------+-------+----------------------------+-----------------+
| Id | Binary           | Host                                   | Zone     | Status  | State | Updated_at                 | Disabled Reason |
+----+------------------+----------------------------------------+----------+---------+-------+----------------------------+-----------------+
| 5  | nova-cert        | ip-192-169-142-127.ip.secureserver.net | internal | enabled | up    | 2016-03-31T09:59:53.000000 |                 |
| 6  | nova-consoleauth | ip-192-169-142-127.ip.secureserver.net | internal | enabled | up    | 2016-03-31T09:59:52.000000 | -               |
| 7  | nova-scheduler   | ip-192-169-142-127.ip.secureserver.net | internal | enabled | up    | 2016-03-31T09:59:52.000000 | -               |
| 8  | nova-conductor   | ip-192-169-142-127.ip.secureserver.net | internal | enabled | up    | 2016-03-31T09:59:54.000000 | -               |
| 10 | nova-compute     | ip-192-169-142-137.ip.secureserver.net | nova     | enabled | up    | 2016-03-31T09:59:55.000000 | -               |
+----+------------------+----------------------------------------+----------+---------+-------+----------------------------+-----------------+

== Nova networks ==

+--------------------------------------+--------------+------+
| ID                                   | Label        | Cidr |
+--------------------------------------+--------------+------+
| 47798c88-29e5-4dee-8206-d0f9b7e19130 | public       | -    |
| 8f849505-0550-4f6c-8c73-6b8c9ec56789 | private      | -    |
| bcfcf3c3-c651-4ae7-b7ee-fdafae04a2a9 | demo_network | -    |
+--------------------------------------+--------------+------+

== Nova instance flavors ==

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+
| ID | Name      | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |
+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+
| 1  | m1.tiny   | 512       | 1    | 0         |      | 1     | 1.0         | True      |
| 2  | m1.small  | 2048      | 20   | 0         |      | 1     | 1.0         | True      |
| 3  | m1.medium | 4096      | 40   | 0         |      | 2     | 1.0         | True      |
| 4  | m1.large  | 8192      | 80   | 0         |      | 4     | 1.0         | True      |
| 5  | m1.xlarge | 16384     | 160  | 0         |      | 8     | 1.0         | True      |
+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

== Nova instances ==

+--------------------------------------+------------------+----------------------------------+--------+------------+-------------+---------------------------------------+
| ID                                   | Name             | Tenant ID                        | Status | Task State | Power State | Networks                              |
+--------------------------------------+------------------+----------------------------------+--------+------------+-------------+---------------------------------------+
| c8284258-f9c0-4b81-8cd0-db6e7cbf8d48 | UbuntuRastasheep | 32df2fd0c85745c9901b2247ec4905bc | ACTIVE | -          | Running     | demo_network=90.0.0.15, 172.124.4.154 |
| 50f22f8a-e6ff-4b8b-8c15-f3b9bbd1aad2 | derbyGlassfish   | 32df2fd0c85745c9901b2247ec4905bc | ACTIVE | -          | Running     | demo_network=90.0.0.16, 172.124.4.155 |
| 03664d5e-f3c5-4ebb-9109-e96189150626 | testLars         | 32df2fd0c85745c9901b2247ec4905bc | ACTIVE | -          | Running     | demo_network=90.0.0.14, 172.124.4.153 |
+--------------------------------------+------------------+----------------------------------+--------+------------+-------------+---------------------------------------+

*********************************

Nova-Docker Setup on Compute

*********************************

# curl -sSL https://get.docker.com/ | sh

# usermod -aG docker nova ( this alone does not seem to help while docker.sock keeps mode 660, hence the chmod 666 below )

# systemctl start docker

# systemctl enable docker

# chmod 666 /var/run/docker.sock (add to /etc/rc.d/rc.local)

# easy_install pip

# git clone -b stable/mitaka https://github.com/openstack/nova-docker
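The chmod 666 step above has to be repeated at every boot, which is why it goes into /etc/rc.d/rc.local. A small sketch of adding that line only once, so rerunning the setup does not pile up duplicates; `append_once` is a hypothetical helper name, not part of any package.

```shell
#!/bin/bash
# Hypothetical helper: append a line to a file unless it is already there.
append_once() {
    local file="$1" line="$2"
    # -F fixed string, -x whole-line match, -q quiet; a missing file counts as "not found".
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Typical use on the Compute node (as root):
#   append_once /etc/rc.d/rc.local 'chmod 666 /var/run/docker.sock'
#   chmod +x /etc/rc.d/rc.local   # on CentOS 7 rc.local runs at boot only if executable
```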

*******************

Driver build

*******************

# cd nova-docker

# pip install -r requirements.txt

# python setup.py install

********************************************

Switch nova-compute to DockerDriver

********************************************

vi /etc/nova/nova.conf

compute_driver=novadocker.virt.docker.DockerDriver
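If you prefer not to edit nova.conf by hand, the same change can be scripted. The sketch below assumes GNU sed, assumes the option lives in the [DEFAULT] section, and is not section-aware beyond that; `set_default_option` is an illustrative name only.

```shell
#!/bin/bash
# Hypothetical helper: set key=value in an ini-style file.
# Assumption: the key belongs to [DEFAULT] and appears uncommented at most
# in that section; other sections are not inspected.
set_default_option() {
    local file="$1" key="$2" value="$3"
    if grep -q "^${key}[[:space:]]*=" "$file"; then
        # An uncommented setting exists: overwrite its value in place.
        sed -i "s|^${key}[[:space:]]*=.*|${key}=${value}|" "$file"
    else
        # No active setting: add one right below the [DEFAULT] header.
        sed -i "/^\[DEFAULT\]/a ${key}=${value}" "$file"
    fi
}

# Typical use on the Compute node (as root):
#   set_default_option /etc/nova/nova.conf compute_driver novadocker.virt.docker.DockerDriver
```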

***********************************

Next step on Controller

***********************************

mkdir /etc/nova/rootwrap.d

vi /etc/nova/rootwrap.d/docker.filters

[Filters]

# nova/virt/docker/driver.py: 'ln', '-sf', '/var/run/netns/.*'

ln: CommandFilter, /bin/ln, root
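The filters file can also be written in one shot with a here-document instead of vi. This is just a sketch; `write_docker_filters` is a made-up name, and the directory argument exists only so the function can be tried outside /etc.

```shell
#!/bin/bash
# Hypothetical helper: create the rootwrap.d directory and the docker.filters
# file with the content shown above.
write_docker_filters() {
    local dir="${1:-/etc/nova/rootwrap.d}"
    mkdir -p "$dir"
    # Quoted 'EOF' keeps the content literal (no variable expansion).
    cat > "$dir/docker.filters" <<'EOF'
[Filters]
# nova/virt/docker/driver.py: 'ln', '-sf', '/var/run/netns/.*'
ln: CommandFilter, /bin/ln, root
EOF
}

# Typical use (as root):
#   write_docker_filters
```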

****************************************************

Nova Compute Service restart on Compute

****************************************************

# systemctl restart openstack-nova-compute

****************************************

Glance API Service restart on Controller

****************************************

vi /etc/glance/glance-api.conf

container_formats=ami,ari,aki,bare,ovf,ova,docker

# systemctl restart openstack-glance-api
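Before uploading images it may be worth checking that the edited line really lists docker. A minimal sketch, assuming the option is written on a single uncommented line as shown above; `has_container_format` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the last uncommented container_formats line
# in the given file contains the requested format as an exact list item.
has_container_format() {
    local file="$1" fmt="$2"
    grep -E '^container_formats[[:space:]]*=' "$file" | tail -n 1 \
        | cut -d= -f2 | tr ',' '\n' | tr -d ' ' | grep -qx -- "$fmt"
}

# Typical use on the Controller:
#   has_container_format /etc/glance/glance-api.conf docker && echo "docker format enabled"
```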

On the Compute node, build the GlassFish 4.1 docker image per

http://bderzhavets.blogspot.com/2015/01/hacking-dockers-phusionbaseimage-to.html and upload it to Glance :-

[root@ip-192-169-142-137 ~(keystone_admin)]# docker images

REPOSITORY                 TAG      IMAGE ID       CREATED          SIZE
derby/docker-glassfish41   latest   615ce2c6a21f   29 minutes ago   1.155 GB
rastasheep/ubuntu-sshd     latest   70e0ac74c691   32 hours ago     251.6 MB
phusion/baseimage          latest   772dd063a060   3 months ago     305.1 MB
larsks/thttpd              latest   a31ab5050b67   15 months ago    1.058 MB

[root@ip-192-169-142-137 ~(keystone_admin)]# docker save derby/docker-glassfish41 | openstack image create derby/docker-glassfish41 --public --container-format docker --disk-format raw

+------------------+------------------------------------------------------+
| Field            | Value                                                |
+------------------+------------------------------------------------------+
| checksum         | dca755d516e35d947ae87ff8bef8fa8f                     |
| container_format | docker                                               |
| created_at       | 2016-03-31T09:32:53Z                                 |
| disk_format      | raw                                                  |
| file             | /v2/images/cbf88266-0b49-4bc2-9527-cc9c9da0c1eb/file |
| id               | cbf88266-0b49-4bc2-9527-cc9c9da0c1eb                 |
| min_disk         | 0                                                    |
| min_ram          | 0                                                    |
| name             | derby/docker-glassfish41                             |
| owner            | 677c4fec97d14b8db0639086f5d59f7d                     |
| protected        | False                                                |
| schema           | /v2/schemas/image                                    |
| size             | 1175030784                                           |
| status           | active                                               |
| tags             |                                                      |
| updated_at       | 2016-03-31T09:33:58Z                                 |
| virtual_size     | None                                                 |
| visibility       | public                                               |
+------------------+------------------------------------------------------+
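The Glance listing earlier shows three docker images, so the save-and-upload pipeline gets repeated per image. A small wrapper sketch, assuming the Glance image name should match the docker repository name as in the command above; `upload_docker_image` is an illustrative name, and the flags are the ones used in this post.

```shell
#!/bin/bash
# Hypothetical wrapper around the upload pipeline used above: stream the
# docker image tarball straight into Glance under the same name.
upload_docker_image() {
    local image="$1"
    docker save "$image" | openstack image create "$image" \
        --public --container-format docker --disk-format raw
}

# Typical use on the Compute node, with keystonerc_admin sourced:
#   for img in larsks/thttpd rastasheep/ubuntu-sshd; do
#       upload_docker_image "$img"
#   done
```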

Now launch the derbyGlassfish instance via the dashboard and assign a floating IP

Access to Glassfish instance via FIP 172.124.4.155

[root@ip-192-169-142-137 ~(keystone_admin)]# docker ps

CONTAINER ID   IMAGE                      COMMAND                  CREATED             STATUS             PORTS   NAMES
70ac259e9176   derby/docker-glassfish41   "/sbin/my_init"          3 minutes ago       Up 3 minutes               nova-50f22f8a-e6ff-4b8b-8c15-f3b9bbd1aad2
a0826911eabe   rastasheep/ubuntu-sshd     "/usr/sbin/sshd -D"      About an hour ago   Up About an hour           nova-c8284258-f9c0-4b81-8cd0-db6e7cbf8d48
7923487076d5   larsks/thttpd              "/thttpd -D -l /dev/s"   About an hour ago   Up About an hour           nova-03664d5e-f3c5-4ebb-9109-e96189150626

Posted by dbaxps
